The 70 teams hailed from all over: North America, Europe, Asia, the Middle East, South America, Australia.

Far-flung as they were, each was given the same assignment: Examine collections of irregular, blotchy shapes that formed seemingly abstract patterns, then describe what they saw and derive meaning from the muddle. With enough repetition and informed interpretation, surely those patterns could help tease apart what was going on inside their heads.

Rorschach tests, right? Try again.

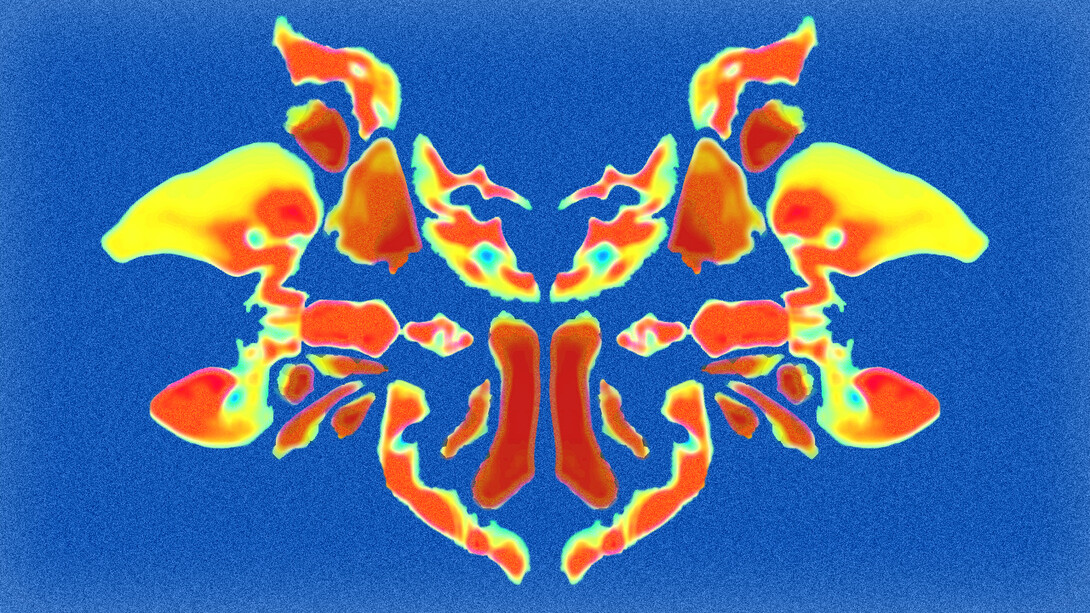

The University of Nebraska–Lincoln’s Doug Schultz and his fellow participants were not the analyzed but the analyzers. And what they analyzed were functional MRIs, which track changes in blood flow and assign corresponding colors to indicate areas of the brain that are active or at rest. As the predominant method of brain mapping, fMRI has helped researchers investigate questions of cognition, emotion and function since its current form emerged in the early 1990s.

The study presented each team with identical fMRI readouts from people whose brains were mapped while they engaged in gambling tasks. The teams were then asked to test nine yes-or-no hypotheses: When participants lost a bet, for instance, did their brains show activity in the ventromedial prefrontal cortex? Every team was also required to show its work, effectively laying out the branching decision tree that led to its conclusions.

Of the 70 teams, no two followed exactly the same analytical and statistical path to answering the hypotheses. That variability in process showed up in the results, too. Though at least 84% of teams agreed on four of the hypotheses, consensus on the other five ranged from just 63% to 79% of teams. On average across the nine hypotheses, 20% of teams reached a conclusion that diverged from the majority.

That divergence would seem to partly account for fMRI’s so-called replication crisis: the inability of independent groups to consistently reproduce results reported by the original researchers. Though far from being exclusive to fMRI or even psychology, the issue was prevalent enough to compel researchers at Tel Aviv University, Stanford University and the University of Oxford to recruit worldwide participation in the study.

Schultz and colleague Matt Johnson, both assistant professors of psychology at Nebraska, took note and, alongside a postdoctoral researcher and graduate students, decided to take part.

“There’s been a movement the last few years to try to improve the replicability of these findings,” Schultz said. “Obviously, a lot of time and effort and money goes into funding these studies, and the more accurate data we get through our analyses, the more accurate the conclusions we draw. We increase the efficiency of the entire system, and we don’t have some dead ends that are created by faulty results in the first place.”

As a member of the university’s Center for Brain, Biology and Behavior, Schultz has relied on fMRI to study how brain networks communicate when pursuing a goal and to examine how the brain operates when trying to regulate emotion. That experience, he said, has revealed myriad reasons why fMRI leaves ample room for interpretation.

Reason No. 1? The sheer number of decisions and steps involved in what is an inherently complex process, Schultz said. During the study, just as they would when conducting their own research, teams were free to decide what magnitude of change in blood flow actually constituted statistically meaningful brain activity.

As might be expected, the flexibility of those thresholds meant that thresholded maps yielded less consensus than unthresholded maps, which simply report the raw statistical values across the brain. To help mitigate those effects and stimulate discussion of assumptions, Schultz and his co-authors have advocated for sharing unthresholded fMRI maps when reporting findings.

“Different groups can use different approaches to deal with that multiple-comparison problem, and that can result in pretty dramatically different thresholded maps,” Schultz said. “So I think that that’s probably where a fair amount of variability comes from.”
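To make that concrete, here is a minimal Python sketch that assumes ten made-up voxel p-values rather than anything from the study. It applies three standard approaches: no correction, a Bonferroni correction and the Benjamini-Hochberg false-discovery-rate procedure. It is not a reconstruction of what any team in the study actually did, and the function names are hypothetical.

```python
"""Illustrative only: three common ways of thresholding the same voxel p-values.

The p-values below are invented for the example; they are not data from the study.
"""


def uncorrected(p_values, alpha=0.05):
    """Keep every voxel whose p-value is below alpha, ignoring how many tests were run."""
    return [i for i, p in enumerate(p_values) if p < alpha]


def bonferroni(p_values, alpha=0.05):
    """Divide alpha by the number of tests, controlling the family-wise error rate."""
    cutoff = alpha / len(p_values)
    return [i for i, p in enumerate(p_values) if p < cutoff]


def benjamini_hochberg(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up procedure, controlling the false discovery rate."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) with p_(k) <= k / m * alpha.
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            k_max = rank
    # Every voxel ranked at or below k_max counts as active.
    return sorted(order[:k_max])


# Ten hypothetical voxels.
p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]

for name, method in [("uncorrected", uncorrected),
                     ("Bonferroni", bonferroni),
                     ("Benjamini-Hochberg", benjamini_hochberg)]:
    active = method(p)
    print(f"{name}: {len(active)} active -> {active}")
```

On these ten values, the uncorrected rule marks five voxels as active, Benjamini-Hochberg marks two and Bonferroni marks one. The data are identical, yet the result is three different thresholded maps.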

As he was keen to point out, though, plenty of other divergence-driving decisions can emerge. Typically, researchers treat a change in blood flow as statistically meaningful if a change that large would have less than a 5% chance of showing up by chance alone. But again, Schultz said, sheer numbers can overwhelm the best intentions. An fMRI scan tracks and records blood flow in cubes of space called voxels, essentially the 3D equivalent of a pixel, and a single scan can contain up to 100,000 voxels.

“If we’re testing 100,000 things,” he said, “and our false positive rate is 5%, that still gives us a lot of voxels that aren’t really activated that we would show as activated simply by chance.”
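Schultz’s arithmetic can be checked with a short simulation. This sketch assumes 100,000 voxels with no real activation at all. It relies on the standard fact that a valid test’s p-value is uniformly distributed when nothing is going on. The names and the fixed random seed are illustrative choices.

```python
import random

N_VOXELS = 100_000  # upper-end voxel count quoted above
ALPHA = 0.05        # conventional 5% false-positive rate


def count_false_positives(threshold, n_voxels=N_VOXELS, seed=0):
    """Simulate voxels with no real activation and count how many pass `threshold`.

    Under the null hypothesis a valid test's p-value is uniform on [0, 1],
    so each voxel's p-value is drawn with rng.random().
    """
    rng = random.Random(seed)
    return sum(1 for _ in range(n_voxels) if rng.random() < threshold)


expected = N_VOXELS * ALPHA  # voxels expected to "light up" by chance alone
# The same seed means both thresholds are applied to the same simulated brain.
uncorrected_hits = count_false_positives(ALPHA)
bonferroni_hits = count_false_positives(ALPHA / N_VOXELS)  # per-voxel cutoff of 5e-7

print(f"expected by chance: {expected:.0f}")
print(f"simulated, p < {ALPHA}: {uncorrected_hits}")
print(f"simulated, Bonferroni p < {ALPHA / N_VOXELS:g}: {bonferroni_hits}")
```

The uncorrected count lands near the expected 5,000, every one of them a false positive. The Bonferroni cutoff (5% divided by the number of voxels) typically lets none through. The price of that strictness is that genuinely active voxels with modest effects can be missed too.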

Some teams address the problem by lowering that false-positive threshold to, say, 3% or 1%. Others disregard isolated voxels that are off on their own, counting only voxels that sit within a cluster of some minimum number of active neighbors.
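The neighbor rule describes what is usually called cluster-extent thresholding. The minimal sketch below assumes the map has already been thresholded voxel by voxel. It treats voxels as neighbors only when they share a face. The minimum cluster size of 5 is an arbitrary illustration: in practice that number is itself an analyst’s choice, often derived from properties of the data.

```python
from collections import deque


def cluster_extent_filter(active, min_size):
    """Keep only suprathreshold voxels that belong to a cluster of >= min_size voxels.

    `active` is a set of (x, y, z) coordinates that already passed a voxelwise
    threshold. Two voxels count as neighbors if they share a face
    (6-connectivity), which is one of several conventions in use.
    """
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    unvisited = set(active)
    kept = set()
    while unvisited:
        # Breadth-first search outward from an arbitrary unvisited voxel.
        start = unvisited.pop()
        cluster = [start]
        queue = deque([start])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in offsets:
                neighbor = (x + dx, y + dy, z + dz)
                if neighbor in unvisited:
                    unvisited.remove(neighbor)
                    cluster.append(neighbor)
                    queue.append(neighbor)
        # Discard the whole cluster if it is too small.
        if len(cluster) >= min_size:
            kept.update(cluster)
    return kept


# Hypothetical map: a 2x2x2 block of active voxels plus one isolated voxel.
block = {(x, y, z) for x in range(2) for y in range(2) for z in range(2)}
active_voxels = block | {(10, 10, 10)}
survivors = cluster_extent_filter(active_voxels, min_size=5)
print(len(active_voxels), "->", len(survivors))  # 9 -> 8: the lone voxel is dropped
```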

That’s all before getting into logistical issues and the choices they spawn. Brains are rarely the same size or shape, so comparing them requires standardizing those variables, a process that, ironically, can be done to varying degrees and in any number of ways. There’s also the fact that people don’t hold their heads perfectly still while undergoing an MRI, which introduces its own complications. If a person’s head shifted two millimeters during a certain frame, should the data be kept or discarded? Is that distance more or less important than the number of shifts? Just how many of those are acceptable?
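Motion decisions like these are often made with a summary measure called framewise displacement (FD), which adds up how far the head moved between consecutive frames. The sketch below follows the commonly used Power et al. (2012) definition, which converts rotations to millimeters on a 50 mm sphere. The 0.5 mm frame cutoff and the 25% limit on flagged frames are assumptions chosen for illustration, and the function names are hypothetical.

```python
"""Hypothetical motion-screening rules of the kind described above.

FD follows the Power et al. (2012) definition; the cutoffs are illustrative
assumptions, not values from the study.
"""

HEAD_RADIUS_MM = 50.0  # converts rotations (radians) to arc length in mm


def framewise_displacement(params):
    """params: one (tx, ty, tz, rx, ry, rz) tuple per frame, with translations
    in mm and rotations in radians. Returns one FD value per frame (0.0 for the first)."""
    fd = [0.0]
    for prev, cur in zip(params, params[1:]):
        translation = sum(abs(c - p) for c, p in zip(cur[:3], prev[:3]))
        rotation = sum(abs(c - p) * HEAD_RADIUS_MM for c, p in zip(cur[3:], prev[3:]))
        fd.append(translation + rotation)
    return fd


def screen_run(params, fd_cutoff_mm=0.5, max_bad_fraction=0.25):
    """Flag frames whose FD exceeds the cutoff, then decide whether the whole
    run is usable. Returns (indices of flagged frames, keep_run)."""
    fd = framewise_displacement(params)
    flagged = [i for i, value in enumerate(fd) if value > fd_cutoff_mm]
    keep_run = len(flagged) / len(fd) <= max_bad_fraction
    return flagged, keep_run


# Five frames: the head slides 2 mm along x between frames 2 and 3,
# then rotates slightly (0.001 rad, about 0.05 mm) before frame 4.
frames = [
    (0.0, 0.0, 0.0, 0.0, 0.0, 0.0),
    (0.1, 0.0, 0.0, 0.0, 0.0, 0.0),
    (0.1, 0.0, 0.0, 0.0, 0.0, 0.0),
    (2.1, 0.0, 0.0, 0.0, 0.0, 0.0),
    (2.1, 0.0, 0.0, 0.0, 0.0, 0.001),
]
flagged, keep_run = screen_run(frames)
print(flagged, keep_run)
```

Here only the 2 mm jump is flagged, and one bad frame out of five passes the 25% rule. Tightening `max_bad_fraction` to 0.1 would discard the same run, which is exactly the kind of choice Schultz describes.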

Making those considerations and decisions publicly available on a more regular basis, Schultz said, rates as a relatively easy but substantial step toward addressing replicability issues.

“All these things are parts of a pipeline — a series of processing steps from the beginning, when you get ones and zeros off the scanner, to the end, when you have a typical map of the brain and are making some kind of group-level inference,” Schultz said. “There are a lot of points in that pipeline where you can make different decisions.

“I think this points to the importance of saying: Any one result, you have to be really careful in evaluating it. You have to look at some of the details — some of those processing choices that were made — and how that might influence their results and their conclusions.”
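A rough way to see why no two teams landed on the same path: even a handful of decision points multiplies quickly. The options below are hypothetical and are not the choices the 70 teams reported, although the named software packages and the MNI152 template are real tools in the field.

```python
from itertools import product

# Hypothetical decision points with a few plausible options each; these are
# illustrative choices, not the options the study's teams actually reported.
PIPELINE_CHOICES = {
    "software": ["FSL", "SPM", "AFNI"],
    "smoothing_fwhm_mm": [4, 6, 8],
    "motion_fd_cutoff_mm": [0.2, 0.5, 0.9],
    "multiple_comparisons": ["voxelwise FWE", "FDR", "cluster extent"],
    "spatial_template": ["MNI152 linear", "MNI152 nonlinear"],
}

# Every combination of one option per decision point is a distinct pipeline.
pipelines = [dict(zip(PIPELINE_CHOICES, combo))
             for combo in product(*PIPELINE_CHOICES.values())]
print(len(pipelines))  # 3 * 3 * 3 * 3 * 2 = 162
print(pipelines[0])
```

Five decisions with two or three options each already yield 162 distinct pipelines, before counting the many smaller settings a real analysis involves.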

Still, Schultz said he does see value in the proliferation of different pipelines, even beyond the meta-research context of the study, which was published in the journal Nature. That’s especially so given that fMRI analysis is still in its adolescence, he said, and probably won’t see the emergence of a “gold standard” anytime soon.

“If, five years down the road, we figure out there was a big problem with this (certain) processing step — well, if everyone used it, everything is problematic,” he said. “Whereas if you have different people doing things with slight variations, maybe certain studies are somewhat protected from that problem.”

And the fact that the study revealed even as much overlap as it did, while peeling back some of the issues likely at the core of the replication crisis, has left Schultz feeling relatively optimistic about the future of fMRI.

“It would be potentially easier to look at the results and say, ‘Look, everybody’s doing something different, and all the results are changing. Who knows what any of this means?’” he said. “I would argue that we have identified some consistent results here, and we are moving things forward, which I think is ultimately what the goal of all science should be.

“This is an evolving field, and we’re still trying to figure out better ways to do everything.”